Artificial Intelligence in Emotion Recognition

Emotions serve as a source of information about how a person is reacting to a particular situation. Recognizing emotions can help us act to achieve desired outcomes. Humans use a variety of indicators, such as facial expressions, voice modulation, speech content, body language, and historical context, to gauge the emotions of others.

Emotion recognition using AI, that is, identifying human emotions with technology, is a relatively new field. This technology generally works best when it combines multiple modalities to make predictions. To date, most work has focused on automatically recognizing facial expressions from video, spoken expressions from audio, written expressions from text, and physiology as measured by wearable devices.

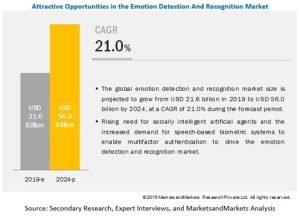

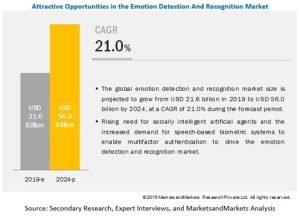

According to a MarketsandMarkets report [1] published in February 2020, the global Emotion Detection and Recognition Market is expected to grow from USD 21.6 billion to a staggering USD 56 billion by 2024. Technology companies such as Amazon and Microsoft have already launched emotion detection tools that predict emotions with varying accuracy. So, are you ready to use this emerging technology to your business advantage?

This article looks at use cases of these technologies, gives an overview of how they work, and discusses the concerns they raise.

Emotion Detection and Recognition Market

The ongoing pandemic has moved many day-to-day activities online, including classes, hiring processes, and work. This shift has increased the demand for emotion detection and recognition software. The market for software related to this technology is expected to grow at a Compound Annual Growth Rate (CAGR) of 21.0% through 2024. Several factors are expected to work in the market's favor in the near future: the rising need for socially intelligent artificial agents, increasing demand for speech-based biometric systems that enable multifactor authentication, technological advances across the globe, and a growing emphasis on operational excellence. The pandemic has further reinforced the need for such technology.
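The market figures in this section can be sanity-checked with the compound-growth formula, value = start × (1 + CAGR)^years. The sketch below assumes a five-year horizon ending in 2024, which implies a 2019 base year. The article does not state the base year, so this is an inference. The function names are illustrative only.

```python
def project_value(start, cagr, years):
    """Compound a starting value at a constant annual growth rate."""
    return start * (1.0 + cagr) ** years


def implied_cagr(start, end, years):
    """Constant annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


# Figures from the article, in USD billions. YEARS = 5 is an assumption
# (2019 -> 2024); the article only gives 2024 as the end year.
START, END, YEARS = 21.6, 56.0, 5

print(f"{project_value(START, 0.21, YEARS):.1f}")   # 56.0
print(f"{implied_cagr(START, END, YEARS):.1%}")     # 21.0%
```

Under that assumption, the USD 21.6 billion starting point, the 21% CAGR, and the USD 56 billion target agree with one another.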

Advances in technologies such as Deep Learning and NLP (Natural Language Processing) have further accelerated the development and adoption of emotion recognition software. In Deep Learning, neural networks can, for example, be trained to classify images into several classes. Applied to faces, such networks can detect whether an expression denotes sadness, happiness, shock, anger, etc.

Applications of Emotion Detection and Recognition Technologies

Market Research

FER (Facial Expression Recognition) software can be used in focus groups, beta testing for product marketing, and other market research activities to find out how customers feel about certain products. Because the participants have consented to the use of FER software on them, this application avoids most legal complications. FER technologies have become notorious for collecting data covertly; consent-based market research involves no such malpractice.

In 2015, M&C Saatchi ran another novel marketing experiment: an AI-powered poster whose advertisement changed based on the facial expressions of people passing by.

Recruitment

The coronavirus pandemic has moved many in-person interviews to video calls. Emotion detection software can analyze candidates' expressions, such as fear, shock, happiness, or neutrality. However, this remains a controversial use case of emotion recognition software because of the following caveats:

- The AI model used might have a racial bias. For example, such models have been reported to classify Black men's faces as “angry” more often than other faces.

- The use of such technologies is legally restricted in the EU and a few other nations.

- This usage is likely to face further regulation.

Deepfake Detection

Deepfakes are AI-generated fake videos derived from real ones. A deepfake generator takes as input a video of a specific individual (the ‘target’) and outputs another video in which the target's face is replaced with that of another individual (the ‘source’) [3]. The 2020 US election saw a surge in such videos, many of them politically motivated. A study published by the Computer Vision Foundation, conducted in partnership with UC Berkeley, DARPA, and Google, used facial expression recognition to detect deepfakes.

Medical Research in Autism

People with autism often find it difficult to produce facial expressions that fit the situation, a conclusion reached across the 39 studies analyzed in [4]. Autistic people may remain expressionless or produce facial expressions that others find difficult to interpret. Machine Learning can be applied to the early detection of autism spectrum disorder (ASD), since people diagnosed with this disorder often have long-term difficulties interpreting facial expressions.

Several Machine Learning projects and studies have aimed to help people on the autism spectrum. Stanford University's Autism Glass project [5] used Haar cascades to detect faces in images, and then ran emotion prediction on these images with Google Glass, Google's head-worn computing device. The project aimed to help autistic people by giving them appropriate social cues.
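Haar cascades (the Viola-Jones detector) score image windows with rectangle ("Haar-like") features. Each feature is computed in constant time from an integral image, and many boosted classifiers over these features are chained into a cascade. The pure-Python sketch below shows only that feature computation on a toy 4x4 patch. It is not the trained detector used by Autism Glass, and all function names are illustrative. In practice one would load a pretrained cascade, for example through OpenCV's `cv2.CascadeClassifier`.

```python
def integral_image(img):
    """Summed-area table padded with a zero row/column: ii[y][x] = sum of img[:y][:x]."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_vertical_feature(ii, x, y, w, h):
    """Haar-like edge feature: top w-by-h block minus the w-by-h block below it.

    A large positive value means the top region is brighter, e.g. a forehead
    above darker eye regions.
    """
    return rect_sum(ii, x, y, w, h) - rect_sum(ii, x, y + h, w, h)


# Toy 4x4 grayscale patch: bright top half, dark bottom half.
patch = [[9, 9, 9, 9],
         [9, 9, 9, 9],
         [1, 1, 1, 1],
         [1, 1, 1, 1]]
ii = integral_image(patch)
print(rect_sum(ii, 0, 0, 4, 4))                    # 80
print(two_rect_vertical_feature(ii, 0, 0, 4, 2))   # 72 - 8 = 64
```

Because every rectangle sum costs four lookups regardless of its size, a cascade can evaluate thousands of such features per window quickly enough for live video.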

Another project [6] used an app to screen subjects' facial expressions while they watched a movie and compared their expressions with those of non-autistic people. The project used TensorFlow, PyTorch, and AWS (Amazon Web Services).

There are many more applications of emotion detection technologies that can help people with autism.

Virtual Learning Environment

A number of studies have used emotion detection technologies to determine how well students understand and perceive what is being taught in online classes.

One such study [7] uses neural networks to classify facial expressions into six emotion categories. It first detects the face in the input image with the Haar cascade method. Using the face as a basis, it then extracts the eyes and mouth through Sobel edge detection to obtain feature values. Finally, a neural network classifies the facial expression into one of the six emotion classes.
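The Sobel operator mentioned above estimates the image gradient with two 3x3 kernels, one for horizontal and one for vertical change. Edges such as the outlines of the eyes and mouth show up as pixels with a large gradient magnitude. The pure-Python sketch below shows only the edge operator. How [7] turns these edges into its feature values is not described here, and the function name is illustrative.

```python
import math

# Standard Sobel kernels for horizontal (x) and vertical (y) intensity change.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img):
    """Gradient magnitude of a grayscale image (list of rows); border pixels stay 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * p
                    gy += SOBEL_Y[dy][dx] * p
            out[y][x] = math.hypot(gx, gy)
    return out


# 5x5 image with a vertical edge: dark columns 0-1, bright columns 2-4.
img = [[0, 0, 10, 10, 10] for _ in range(5)]
print(sobel_magnitude(img)[2])   # [0.0, 40.0, 40.0, 0.0, 0.0]
```

Only the pixels next to the dark-to-bright transition respond, which is what lets edge maps isolate facial features from flat skin regions.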

How Does Emotion Recognition Work?

Emotion Recognition using images

Most emotion recognition software classifies emotions into one of seven classes: neutral, happy, sad, surprise, fear, disgust, and anger. The first step in any facial expression classifier is to detect the faces present in an image or video feed.

The next step is to feed the detected faces into the emotion classification model. Classification models usually employ CNNs (Convolutional Neural Networks) trained to recognize the facial expression classes in the training dataset. Essentially, a CNN applies a set of learned filters to an image to generate feature maps. These can then be passed to fully connected layers (an ANN, Artificial Neural Network) or to any other machine learning algorithm for classification.
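To make the data flow concrete, the pure-Python sketch below runs one forward pass: filters, then ReLU, then 2x2 max pooling to produce feature maps, then a flattened vector, then a fully connected layer with a softmax over the seven classes. It assumes face detection has already produced a cropped grayscale face, and an 8x8 random patch stands in for it here. The weights are random and untrained, so the predicted label is meaningless. A real system would learn these parameters with a framework such as TensorFlow or PyTorch. All names are illustrative.

```python
import math
import random

EMOTIONS = ["neutral", "happy", "sad", "surprise", "fear", "disgust", "anger"]


def conv2d(img, kernel):
    """'Valid' 2-D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(kernel[i][j] * img[y + i][x + j] for i in range(kh) for j in range(kw))
             for x in range(len(img[0]) - kw + 1)]
            for y in range(len(img) - kh + 1)]


def relu(fmap):
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2(fmap):
    """2x2 max pooling with stride 2 (an odd trailing row/column is dropped)."""
    return [[max(fmap[y][x], fmap[y][x + 1], fmap[y + 1][x], fmap[y + 1][x + 1])
             for x in range(0, len(fmap[0]) - 1, 2)]
            for y in range(0, len(fmap) - 1, 2)]


def softmax(logits):
    m = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_face(face, kernels, weights, biases):
    """Feature maps from each filter -> flatten -> dense layer -> class probabilities."""
    features = []
    for k in kernels:
        pooled = max_pool2(relu(conv2d(face, k)))
        features.extend(v for row in pooled for v in row)
    logits = [sum(w * f for w, f in zip(w_row, features)) + b
              for w_row, b in zip(weights, biases)]
    return dict(zip(EMOTIONS, softmax(logits)))


# Untrained, randomly initialised parameters purely to show the shapes involved.
rng = random.Random(0)
face = [[rng.random() for _ in range(8)] for _ in range(8)]   # stand-in 8x8 face crop
kernels = [[[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)] for _ in range(2)]
n_features = 2 * 3 * 3    # 2 filters; 8x8 -> 6x6 after 3x3 conv -> 3x3 after pooling
weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_features)] for _ in EMOTIONS]
biases = [0.0] * len(EMOTIONS)

probs = classify_face(face, kernels, weights, biases)
print(max(probs, key=probs.get), round(sum(probs.values()), 6))
```

Training adjusts `kernels`, `weights`, and `biases` so that the softmax output puts most of its probability on the correct expression.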

Detecting emotions in audio clips

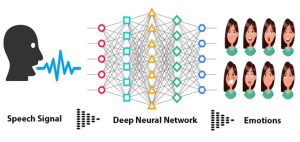

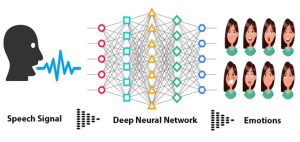

In emotion recognition from audio, various prosodic features can be used to capture emotion-specific properties of the speech signal [8]. Features such as pitch, energy, speaking rate, and word duration are fed into suitable machine learning models to predict the likely emotion.
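Two of these prosodic features can be computed per frame with a few lines of code. The pure-Python sketch below measures energy as root-mean-square amplitude. It estimates pitch by picking the autocorrelation lag with the highest score within an assumed 50 to 400 Hz range. Autocorrelation is one common pitch method, not necessarily the one used in [8]. Speaking rate and word duration need word or syllable segmentation and are omitted. A synthetic 200 Hz tone stands in for a voiced speech frame.

```python
import math


def rms_energy(frame):
    """Root-mean-square energy of one frame of samples."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def estimate_pitch(frame, sr, fmin=50.0, fmax=400.0):
    """Fundamental frequency (Hz) taken as the lag with the highest autocorrelation.

    Only lags corresponding to fmin..fmax are searched. Real pitch trackers also
    make voiced/unvoiced decisions and smooth across frames; that is omitted here.
    """
    min_lag = int(sr / fmax)
    max_lag = min(int(sr / fmin), len(frame) - 1)
    best_lag, best_corr = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        corr = sum(frame[n] * frame[n + lag] for n in range(len(frame) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sr / best_lag


# A 50 ms frame of a synthetic 200 Hz tone sampled at 8 kHz.
SR = 8000
frame = [math.sin(2 * math.pi * 200 * n / SR) for n in range(400)]
print(round(estimate_pitch(frame, SR), 1), round(rms_energy(frame), 4))   # 200.0 0.7071
```

Tracked over successive frames, contours like these (for example, rising pitch and energy) become the inputs to the emotion classifier.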

Another way to detect emotion in audio clips is to compute Mel-frequency cepstral coefficients (MFCCs) from the clip [9] and then apply a CNN to the resulting input. This is one of the most popular techniques for emotion recognition from audio.
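The MFCC step can be sketched by hand in NumPy: pre-emphasis, framing, a Hamming window, the power spectrum, a triangular mel filterbank, a log, and a DCT-II. The parameter values are common defaults assumed here rather than taken from [9]: 25 ms frames with a 10 ms hop at 16 kHz, 26 filters, and 13 coefficients. In practice a library routine such as `librosa.feature.mfcc` would typically be used. The function names below are illustrative.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_filters, n_fft, sr):
    """Triangular filters spaced evenly on the mel scale from 0 Hz to Nyquist."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(1, n_filters + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, center):        # rising edge of the triangle
            fbank[i - 1, k] = (k - left) / (center - left)
        for k in range(center, right):       # falling edge of the triangle
            fbank[i - 1, k] = (right - k) / (right - center)
    return fbank


def dct_ii(x, n_out):
    """Unnormalised DCT-II along the last axis, keeping the first n_out coefficients."""
    n = x.shape[-1]
    k = np.arange(n_out)[:, None]
    m = np.arange(n)[None, :]
    return x @ np.cos(np.pi * k * (2 * m + 1) / (2 * n)).T


def mfcc(signal, sr, frame_len=400, hop=160, n_fft=512, n_filters=26, n_mfcc=13):
    """Return an (n_frames, n_mfcc) matrix of MFCCs for a 1-D signal."""
    signal = np.asarray(signal, dtype=float)
    emphasized = np.append(signal[0], signal[1:] - 0.97 * signal[:-1])   # pre-emphasis
    n_frames = 1 + (len(emphasized) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = emphasized[idx] * np.hamming(frame_len)
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    log_mel = np.log(power @ mel_filterbank(n_filters, n_fft, sr).T + 1e-10)
    return dct_ii(log_mel, n_mfcc)


sr = 16000
t = np.arange(sr) / sr                         # one second of audio
tone = np.sin(2 * np.pi * 440.0 * t)           # stand-in for a speech clip
feats = mfcc(tone, sr)
print(feats.shape)                             # (98, 13): frames x coefficients
```

The resulting frames-by-coefficients matrix can be treated as a one-channel image and fed to a CNN in the same way a face crop would be.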

Putting it together to analyze video

To analyze video, the predictions from the image and audio channels are combined using mathematical or machine learning models, which can produce more accurate results than either modality alone.
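One of the simplest ways to combine the two channels is late (decision-level) fusion: take a weighted average of the per-class probabilities from the face model and the voice model. The sketch below is an illustrative assumption, not a method the article prescribes. The 0.6 face weight is arbitrary, and the input probabilities are made-up model outputs for a single video segment. Feature-level fusion or a learned fusion model are common alternatives.

```python
EMOTIONS = ["neutral", "happy", "sad", "surprise", "fear", "disgust", "anger"]


def late_fusion(face_probs, audio_probs, face_weight=0.6):
    """Weighted average of two per-class probability dicts, renormalised to sum to 1."""
    audio_weight = 1.0 - face_weight
    fused = {e: face_weight * face_probs.get(e, 0.0) + audio_weight * audio_probs.get(e, 0.0)
             for e in EMOTIONS}
    total = sum(fused.values())
    return {e: p / total for e, p in fused.items()}


# Hypothetical outputs of separate face and voice models for one segment.
face = {"neutral": 0.40, "happy": 0.35, "sad": 0.05, "surprise": 0.10,
        "fear": 0.03, "disgust": 0.02, "anger": 0.05}
voice = {"neutral": 0.20, "happy": 0.60, "sad": 0.05, "surprise": 0.05,
         "fear": 0.04, "disgust": 0.01, "anger": 0.05}

fused = late_fusion(face, voice)
print(max(fused, key=fused.get))   # happy (0.45 vs 0.32 for neutral)
```

Here the face model alone would answer "neutral". The confident voice model tips the combined decision to "happy", which illustrates how a second modality can resolve an ambiguous expression.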

Limitations of Emotion Recognition Technologies

Technical Challenges

Emotion recognition shares many challenges with detecting moving objects in video: identifying an object, continuous detection, incomplete or unpredictable actions, etc. It can also suffer from a lack of conversational context, poor lighting in images, and background noise in audio inputs.

Racial Biases

Depending on the datasets used, Machine Learning models for emotion recognition can have an inherent bias. Even Google Photos has suffered from racial bias, misidentifying dark-skinned people. Emotion recognition systems likewise show biases, such as classifying Black men as angry more often than others. It is therefore very important to use diverse datasets for emotion detection and recognition software.
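Alongside diverse training data, bias of the kind described above can be made measurable by evaluating a model separately for each demographic group on a held-out test set. The sketch below is an illustration, not a standard from the article. It reports accuracy and the false-positive rate for one label ("anger" by default) per group. The records are made-up toy data, and "group_a" and "group_b" are placeholder group names.

```python
from collections import defaultdict


def per_group_report(records, watch_label="anger"):
    """Per-group accuracy and false-positive rate for one label.

    `records` holds (group, true_label, predicted_label) tuples. The false-positive
    rate is the share of a group's samples that are NOT truly `watch_label` but
    were predicted as `watch_label`.
    """
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "negatives": 0, "false_pos": 0})
    for group, truth, pred in records:
        s = stats[group]
        s["n"] += 1
        s["correct"] += int(truth == pred)
        if truth != watch_label:
            s["negatives"] += 1
            s["false_pos"] += int(pred == watch_label)
    return {
        group: {
            "accuracy": s["correct"] / s["n"],
            "false_positive_rate": s["false_pos"] / s["negatives"] if s["negatives"] else 0.0,
        }
        for group, s in stats.items()
    }


# Made-up toy predictions for two placeholder groups.
records = [
    ("group_a", "neutral", "neutral"), ("group_a", "happy", "happy"),
    ("group_a", "neutral", "neutral"), ("group_a", "anger", "anger"),
    ("group_b", "neutral", "anger"), ("group_b", "happy", "happy"),
    ("group_b", "neutral", "neutral"), ("group_b", "anger", "anger"),
]
for group, metrics in per_group_report(records).items():
    print(group, metrics)
```

In this toy data, a neutral face from group_b misread as angry appears as a one-in-three false "anger" rate for that group, against zero for group_a. A gap like that is the signal to rebalance or expand the training data.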

Political and Public Scrutiny

Facial recognition and the systems built on it have often drawn criticism from politicians and the public alike, usually over privacy and the use of people's data without their knowledge. The European Union (EU) already places strict limits on facial recognition-based software, and more regulations are expected to follow for emotion recognition technologies.

Conclusion

Emotion detection and recognition systems are under constant political scrutiny. In spite of this, the market for these systems is expected to grow at a compound annual growth rate of 21% and to reach USD 56 billion in revenue by 2024. Beyond these outstanding economic projections, the use cases of emotion detection and recognition software are compelling. If hurdles such as privacy, legal regulation, and racial bias can be overcome, this technology can be integrated into various products to enhance the user experience.

Need help with emotion recognition and detection software?

If you want to build emotion recognition and detection software and need help, advice, or developers, feel free to contact us at contact@quantiantech.com, through our LinkedIn page, or via our company website, www.quantiantech.com.

References

[1] “Emotion Detection and Recognition Market,” Market Research Firm. [Online]. Available: https://www.marketsandmarkets.com/Market-Reports/emotion-detection-recognition-market-23376176.html. [Accessed: 02-Mar-2021]

[2] Sackett Catalogue of Bias Collaboration, E. A. Spencer, K. Mahtani. “Hawthorne bias.” Catalogue Of Bias, 2017

[3] Li, Y., & Lyu, S. (2018). Exposing deepfake videos by detecting face warping artifacts. arXiv preprint arXiv:1811.00656.

[4] Trevisan, D. A., Hoskyn, M., & Birmingham, E. (2018). Facial expression production in autism: A meta‐analysis. Autism Research, 11(12), 1586-1601.

[5] Google Glass may help kids with autism – Stanford Children’s Health. [Online]. Available: https://www.stanfordchildrens.org/en/service/brain-and-behavior/google-glass. [Accessed: 02-Mar-2021]

[6] WIRED Insider, “Researchers Are Using Machine Learning to Screen for Autism in Children,” Wired, 23-Oct-2019. [Online]. Available: https://www.wired.com/brandlab/2019/05/researchers-using-machine-learning-screen-autism-children/#:~:text=Studying%20ASD%20at%20an%20Unprecedented,children%20in%20a%20single%20study. [Accessed: 02-Mar-2021]

[7] Yang, D., Alsadoon, A., Prasad, P. C., Singh, A. K., & Elchouemi, A. (2018). An emotion recognition model based on facial recognition in virtual learning environment. Procedia Computer Science, 125, 2-10.

[8] Štruc, V., Dobrišek, S., Žibert, J., Mihelič, F., & Pavešić, N. (2009, September). Combining audio and video for detection of spontaneous emotions. In European Workshop on Biometrics and Identity Management (pp. 114-121). Springer, Berlin, Heidelberg.

[9] R. Chu, “Speech Emotion Recognition with Convolution Neural Network,” Medium, 01-Jun-2019. [Online]. Available: https://towardsdatascience.com/speech-emotion-recognition-with-convolution-neural-network-1e6bb7130ce3. [Accessed: 02-Mar-2021]
